Praktikum Robotik - Projects

Project 1: Signed-distance function for mapping and tracking with RGB-D cameras (1-2 students)

Contact: Daniel Maier

Mapping and localization are fundamental problems in robot navigation. RGB-D cameras are now very popular sensors for this purpose in the robotics community because they provide dense 3D data directly from the hardware. However, to construct and maintain a world model, the pose of the camera still has to be estimated, and a compact representation of the vast amount of data has to be found. The signed-distance function (SDF) provides an accurate yet efficient way to represent the world. Based on this representation, the current pose of the camera can be recovered from its readings using optimization. A visual rendering of the reconstructed world can be computed using meshing algorithms such as marching cubes. In this project, a recent algorithm for camera tracking and 3D reconstruction using SDFs will be implemented. The project requires a good understanding of computer vision (i.e., analytical geometry, matrix calculus and some algebra). Experience with GPU programming would also be an advantage.
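To illustrate the idea behind an SDF world model, here is a minimal sketch that fuses several depth readings along a single camera ray into a truncated SDF and then recovers the surface as the zero crossing. It is not the algorithm to be implemented in the project: the one-dimensional voxel layout, the running-average weighting, and all function names (`integrate`, `zero_crossing`) are assumptions chosen for illustration.

```python
def integrate(tsdf, weights, voxel_depths, depth_measured, truncation):
    """Fuse one depth reading into the voxels along a single ray.

    Each voxel stores a running average of the truncated signed distance:
    positive in front of the observed surface, negative behind it.
    """
    for i, d in enumerate(voxel_depths):
        sdf = depth_measured - d
        if sdf < -truncation:
            continue  # far behind the surface: occluded, leave untouched
        value = min(truncation, sdf)  # clamp distances in free space
        tsdf[i] = (weights[i] * tsdf[i] + value) / (weights[i] + 1.0)
        weights[i] += 1.0


def zero_crossing(tsdf, weights, voxel_depths):
    """Return the interpolated depth where the TSDF changes sign, or None."""
    for i in range(len(tsdf) - 1):
        observed = weights[i] > 0 and weights[i + 1] > 0
        if observed and tsdf[i] > 0 >= tsdf[i + 1]:
            t = tsdf[i] / (tsdf[i] - tsdf[i + 1])
            return voxel_depths[i] + t * (voxel_depths[i + 1] - voxel_depths[i])
    return None


# Hypothetical example: voxels every 10 cm along one ray, three noisy readings.
depths = [i * 0.1 for i in range(21)]
tsdf = [0.0] * len(depths)
weights = [0.0] * len(depths)
for z in (1.02, 0.98, 1.00):
    integrate(tsdf, weights, depths, z, truncation=0.3)
surface = zero_crossing(tsdf, weights, depths)
```

In a full 3D system the same averaging would run over a voxel grid for every pixel of the depth image, which is where GPU programming becomes useful, and a meshing algorithm such as marching cubes would extract the zero level set instead of a single crossing.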

Project 2: Visual tracking for humanoid navigation (1-2 students)

Contact: Daniel Maier

The aim of this project is to implement a visual object tracker for monocular cameras. Object tracking is particularly important for tasks that require high precision, such as stair climbing or manipulation. Tracking allows the robot to estimate its current pose relative to an object and to approach it. The algorithm developed in this project should be able to cope with different types of objects, such as stairs or boxes. Subsequently, the robot could climb or grasp the object it has approached. The project requires a fair understanding of computer vision (i.e., analytical geometry, matrix calculus and some algebra).

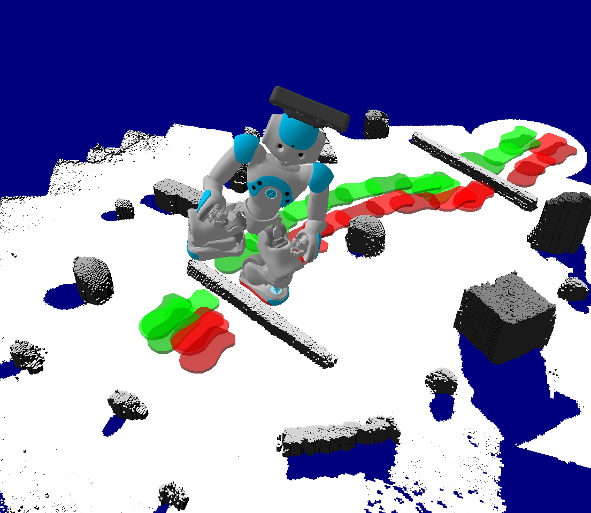

Project 3: Moving Obstacle/Robot Detection and Tracking (1-2 students)

Contact: Dr. Christian Dornhege, Dr. Andreas Hertle

During a robot competition, multiple adversarial robots operate in the same environment. Collisions with objects or other robots are undesirable and might lead to disqualification. Most obstacle avoidance algorithms assume a static world. However, if robots are moving fast, this assumption is insufficient. The goal of this project is to develop a robot (moving object) tracker that copes with fast-moving robots while our own robot is itself moving at speed. We will use a laser range scanner to obtain high-frequency, high-resolution distance data. By localizing ourselves, we will determine which parts of the world are static, since they do not change. The remaining objects will be identified and tracked. The project requires a fair understanding of mapping and localization with laser scanners.
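The separation of static and moving parts of a scan could look roughly like the sketch below. It assumes that the robot pose is already known from localization and that the static world is available as a set of occupied grid cells. Both are simplifications, and the function names, grid resolution, and thresholds are hypothetical rather than part of the project specification.

```python
import math


def endpoint_world(pose, bearing, rng):
    """Transform one laser beam (bearing, range) into world coordinates."""
    x, y, theta = pose
    return (x + rng * math.cos(theta + bearing),
            y + rng * math.sin(theta + bearing))


def split_static_dynamic(pose, scan, static_cells, resolution=0.1, tol=1):
    """Label beam endpoints as static (explained by the map) or dynamic."""
    static, dynamic = [], []
    for bearing, rng in scan:
        p = endpoint_world(pose, bearing, rng)
        cx = math.floor(p[0] / resolution)
        cy = math.floor(p[1] / resolution)
        # Accept neighbouring cells to tolerate small localization errors.
        near_map = any((cx + dx, cy + dy) in static_cells
                       for dx in range(-tol, tol + 1)
                       for dy in range(-tol, tol + 1))
        (static if near_map else dynamic).append(p)
    return static, dynamic


def cluster(points, max_gap=0.3):
    """Group consecutive dynamic endpoints into candidate moving objects."""
    clusters = []
    for p in points:
        if clusters and math.dist(clusters[-1][-1], p) <= max_gap:
            clusters[-1].append(p)
        else:
            clusters.append([p])
    return clusters


def centroid(points):
    """Mean position of a cluster, usable as a measurement for a tracker."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


# Hypothetical example: a wall at x = 2 m and an unknown object to the left.
wall = {(20, j) for j in range(-20, 21)}
scan = [(0.0, 2.05), (math.pi / 2, 1.0), (math.pi / 2 + 0.05, 1.0)]
static_pts, dynamic_pts = split_static_dynamic((0.0, 0.0, 0.0), scan, wall)
objects = [centroid(c) for c in cluster(dynamic_pts)]
```

The cluster centroids would then be associated across consecutive scans to estimate the velocity of each object; that tracking step is deliberately left out here.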

Project 4: Elliptical shape detection from RGB camera images (1-2 students)

Contact: Dr. Andreas Hertle, Dr. Christian Dornhege

Usually, robots rely on distance sensors such as laser range finders or Kinect cameras for orientation and collision avoidance. However, some tasks require detecting distinct visual shapes in the environment. For the upcoming Sick Robot Day competition, the robot has to identify and approach loading stations. The stations are marked with concentric black rings on a white background. These rings need to be detected reliably from various angles and under changing lighting conditions. Additionally, a 3D pose for the robot to approach needs to be estimated from consecutive detections. The same algorithm can also be used to solve independent problems, such as estimating the center of an elliptical environment, and should therefore be implemented generically.
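A circular ring seen from an oblique angle appears as an ellipse in the image, so one possible building block is fitting an ellipse to edge points and checking whether two fitted ellipses share a center. The sketch below uses a plain algebraic least-squares conic fit with the normalization `A x^2 + B x y + C y^2 + D x + E y = 1`, which assumes the curve does not pass through the coordinate origin. This is one simple technique among several, not the method prescribed for the project, and all function names are hypothetical.

```python
import math


def solve_linear(a, b):
    """Solve a small dense linear system by Gaussian elimination."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: points do not define a conic")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_conic(points):
    """Least-squares fit of A x^2 + B xy + C y^2 + D x + E y = 1."""
    rows = [[x * x, x * y, y * y, x, y] for x, y in points]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(5)] for i in range(5)]
    atb = [sum(r[i] for r in rows) for i in range(5)]
    return solve_linear(ata, atb)


def ellipse_center(params):
    """Center of the fitted conic, or None if it is not an ellipse."""
    a, b, c, d, e = params
    det = 4.0 * a * c - b * b
    if det <= 0.0:
        return None  # parabola or hyperbola
    return ((b * e - 2.0 * c * d) / det, (b * d - 2.0 * a * e) / det)


def are_concentric(c1, c2, tol):
    """True if two centers lie within tol of each other."""
    return math.dist(c1, c2) <= tol


def sample_ellipse(center, a, b, angle, n=12):
    """Synthetic edge points on a rotated ellipse (stand-in for real edges)."""
    pts = []
    for k in range(n):
        t = 2.0 * math.pi * k / n
        u, v = a * math.cos(t), b * math.sin(t)
        pts.append((center[0] + u * math.cos(angle) - v * math.sin(angle),
                    center[1] + u * math.sin(angle) + v * math.cos(angle)))
    return pts


# Hypothetical example: inner and outer edge of one ring, seen obliquely.
inner = ellipse_center(fit_conic(sample_ellipse((3.0, 2.0), 2.0, 1.0, 0.5)))
outer = ellipse_center(fit_conic(sample_ellipse((3.0, 2.0), 4.0, 2.0, 0.5)))
same_marker = are_concentric(inner, outer, tol=1e-3)
```

With real images the edge points would come from an edge detector and contain noise and outliers, so a robust fitting scheme and a looser tolerance would be needed. Note also that under perspective projection the centers of concentric circles only approximately coincide in the image.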